
Hi, my name is João F. Henriques. (Sounds a bit like “joo-au” in English.) I like to work in the convex hull of machine learning, deep learning and computer vision. Perhaps my best-known works are on visual tracking, but I have many favourite topics: friendly AI, robot mapping, meta-learning, continual learning, self-supervised learning and optimisation.

My talented DPhil students:

Marian Longa · Shu Ishida · Andreea Oncescu · Tim Franzmeyer · Dominik Kloepfer · Yash Bhalgat · Shivani Mall · Lorenza Prospero · Daniil Zverev

(Graduated: Xu Ji · Mandela Patrick)

Research

Publications, talks and source code

Extracting Reward Functions from Diffusion Models

F. Nuti, T. Franzmeyer, J. F. Henriques

arXiv, 2023

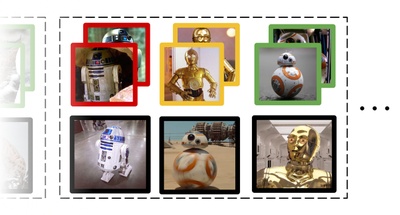

PDF arXiv

Contrastive Lift: 3D Object Instance Segmentation by Slow-Fast Contrastive Fusion

Y. Bhalgat, I. Laina, J. F. Henriques, A. Zisserman, A. Vedaldi

arXiv, 2023

PDF arXiv

D. Kloepfer, D. Campbell, J. F. Henriques

ICCV, 2023

PDF Appendix

N. Nayal, M. Yavuz, J. F. Henriques, F. Güney

ICCV, 2023

PDF Appendix arXiv

Y. Xia, M. Gladkova, R. Wang, Q. Li, U. Stilla, J. F. Henriques, D. Cremers

ICCV, 2023

PDF Appendix arXiv

Y. Bhalgat, J. F. Henriques, A. Zisserman

CVPR, 2023

PDF arXiv

C. Oncescu, J. Valmadre, J. F. Henriques

Tiny Papers at ICLR, 2023

PDF

T. Franzmeyer, P. Torr, J. F. Henriques

Advances in Neural Information Processing Systems, 2022

PDF arXiv

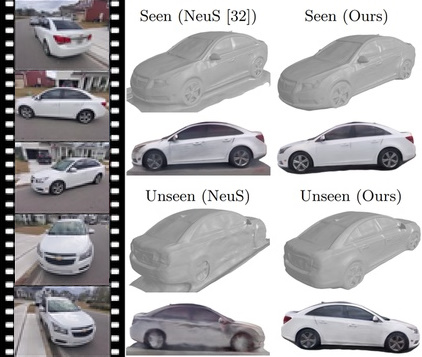

E. Insafutdinov, D. Campbell, J. F. Henriques, A. Vedaldi

ECCV, 2022

We augment neural radiance fields to render views of partially-symmetric objects that are not seen in the data, such as when seeing a car from just one side. Since shadows and reflections break object symmetry, in the process we decompose scenes into geometry, light and material properties.

PDF arXiv

T. Franzmeyer, M. Malinowski, J. F. Henriques

ICLR, 2022

How can autonomous agents help others, including humans, without having exact knowledge of their goals? We explore the concept of increasing others' choice so that they can more easily pursue arbitrary goals, which in some cases even outperforms explicitly cooperative rewards.
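To make "choice" concrete, here is a minimal sketch under a simplifying assumption: another agent's choice is approximated by the number of grid cells it can reach within `n_steps` moves, and a helper picks where to stand so that this count stays as large as possible. The gridworld, `reachable_states` and `best_helper_position` are hypothetical illustrations, not the paper's code or its exact objective.

```python
from collections import deque


def reachable_states(start, walls, width, height, n_steps):
    """Count grid cells reachable from `start` in at most `n_steps` moves.

    Hypothetical proxy for "choice": more reachable cells means more goals
    the agent could still pursue.
    """
    moves = [(0, 1), (1, 0), (0, -1), (-1, 0)]
    seen = {start}
    frontier = deque([(start, 0)])  # breadth-first search from `start`
    while frontier:
        (x, y), dist = frontier.popleft()
        if dist == n_steps:
            continue
        for dx, dy in moves:
            nx, ny = x + dx, y + dy
            if (0 <= nx < width and 0 <= ny < height
                    and (nx, ny) not in walls and (nx, ny) not in seen):
                seen.add((nx, ny))
                frontier.append(((nx, ny), dist + 1))
    return len(seen)


def best_helper_position(candidates, other, width, height, n_steps):
    """Pick the helper cell (treated as an obstacle) that leaves `other` the most choice."""
    return max(candidates,
               key=lambda cell: reachable_states(other, {cell}, width, height, n_steps))


# The other agent sits in the corner of a 5x5 grid; standing next to it
# would block one of its two exits, so the helper prefers the far cell.
print(best_helper_position([(1, 0), (3, 3)], (0, 0), 5, 5, n_steps=2))  # (3, 3)
```

Counting reachable states is only one possible way to quantify choice; other measures (for example, ones that weight states by how likely they are to be reached) fit the same idea.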

PDF arXiv

Audio retrieval with natural language queries: A benchmark study

A. S. Koepke, A. Oncescu, J. Henriques, Z. Akata, S. Albanie

IEEE Transactions on Multimedia, 2022

Illusionary Attacks on Sequential Decision Makers and Countermeasures

T. Franzmeyer, J. F. Henriques, J. N. Foerster, P. H. Torr, A. Bibi, C. S. de Witt

arXiv, 2022

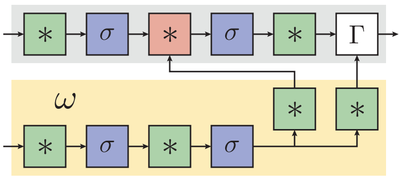

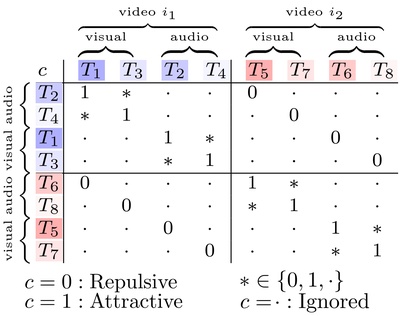

PDF arXiv

M. Patrick, D. Campbell, Y. M. Asano, I. Misra, F. Metze, C. Feichtenhofer, A. Vedaldi, J. F. Henriques

NeurIPS, 2021 (oral presentation)

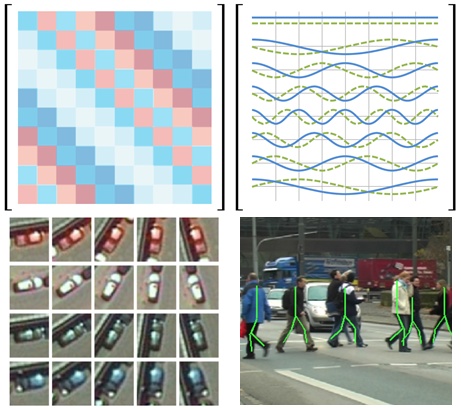

We improve video transformers (e.g. for action recognition) by encouraging attention pooling over motion paths. We also reduce the quadratic computational complexity of attention to linear, with a rigorous probabilistic approximation based on orthogonal prototypes.

PDF arXiv

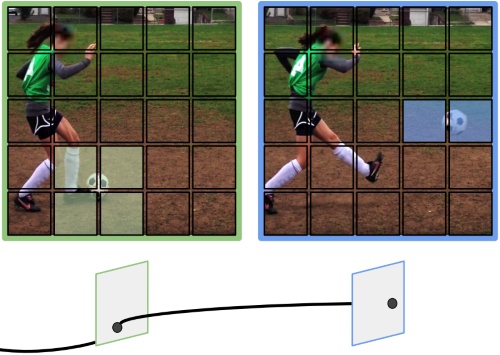

M. Patrick, Y. M. Asano, P. Kuznetsova, R. Fong, J. F. Henriques, G. Zweig, A. Vedaldi

ICCV, 2021

Most contrastive self-supervised methods learn representations that are distinctive to individual examples, and invariant to several other factors. We propose a framework to systematically evaluate valid combinations of distinctive and invariant factors, yielding superior performance in many multi-modal learning tasks.

PDF Code arXiv

M. Patrick, Y. M. Asano, P. Huang, I. Misra, F. Metze, J. F. Henriques, A. Vedaldi

ICCV, 2021

PDF Code arXiv

J. Jiao, J. F. Henriques

BMVC, 2021

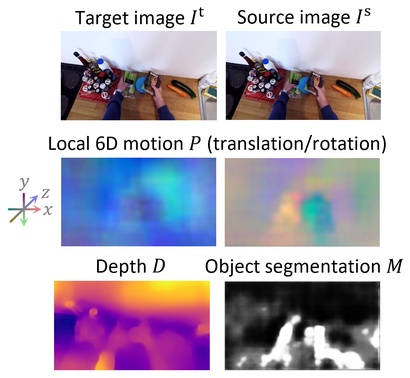

PDF

D. Xu, A. Vedaldi, J. F. Henriques

IROS, 2021

A self-supervised network that learns to decompose a video into camera motion, depths, object segmentation, and object motion in 6D (translation and rotation). We do this by assuming a locally-rigid world model in every patch of the video.

PDF arXiv

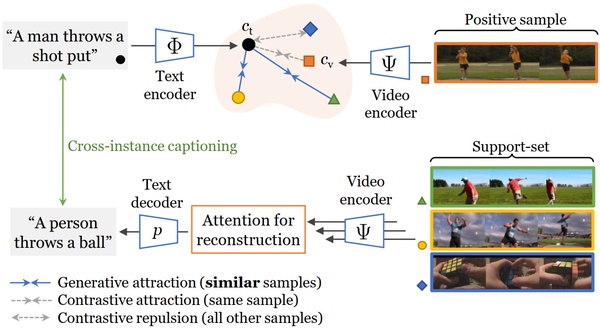

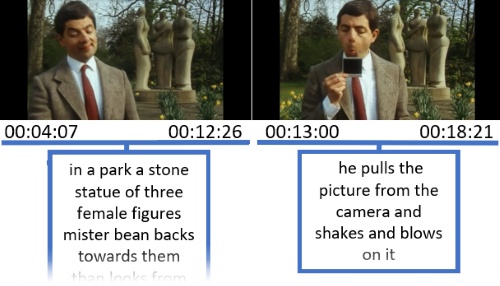

M. Patrick, P. Huang, Y. Asano, F. Metze, A. G. Hauptmann, J. F. Henriques, A. Vedaldi

ICLR, 2021

We investigate noise-contrastive learning of video-text neural networks. We find that learning to reconstruct video captions with video retrieval as a representational bottleneck yields better semantic representations.
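The noise-contrastive part can be sketched generically as an InfoNCE-style loss: each video embedding should be more similar to its own caption embedding than to the other captions in the batch. This plain-Python sketch assumes pre-computed embeddings and cosine similarity with a temperature; it does not implement the paper's caption-reconstruction bottleneck, and `info_nce` and `temperature` are illustrative names.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(video_embs, text_embs, temperature=0.1):
    """Mean InfoNCE loss: pair i is the positive, the other texts in the batch are negatives."""
    total = 0.0
    for i, video in enumerate(video_embs):
        logits = [cosine(video, text) / temperature for text in text_embs]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / len(video_embs)


videos = [[1.0, 0.0], [0.0, 1.0]]
captions = [[0.9, 0.1], [0.2, 0.8]]
# Correctly paired captions give a lower loss than mismatched ones.
print(info_nce(videos, captions) < info_nce(videos, captions[::-1]))  # True
```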

PDF arXiv

A. Oncescu, A. S. Koepke, J. F. Henriques, Z. Akata, S. Albanie

Interspeech, 2021 (nominated for best student paper award)

A content-based audio search engine: similar to Google Images, but for audio.
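A search engine of this kind can be sketched as ranking audio clips by the cosine similarity between a text-query embedding and each clip's embedding. This assumes both have already been mapped into a shared embedding space by some trained model; the embeddings and file names below are made-up placeholders, not data from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def search(query_emb, audio_index, top_k=3):
    """Return the names of the `top_k` clips most similar to the query embedding."""
    ranked = sorted(audio_index.items(),
                    key=lambda item: cosine(query_emb, item[1]), reverse=True)
    return [name for name, _ in ranked[:top_k]]


# Placeholder embeddings; a real system would compute them with trained
# text and audio encoders.
audio_index = {
    "dog_bark.wav": [0.9, 0.1, 0.0],
    "rain.wav": [0.0, 0.2, 0.9],
    "car_horn.wav": [0.1, 0.9, 0.1],
}
query = [1.0, 0.0, 0.1]  # hypothetical embedding of "a dog barking"
print(search(query, audio_index, top_k=2))  # ['dog_bark.wav', 'car_horn.wav']
```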

PDF arXiv

A. Oncescu, J. F. Henriques, Y. Liu, A. Zisserman, S. Albanie

ICASSP, 2021

A dataset with more than 70 hours of detailed spoken narrations for 200 hours of varied YouTube videos, from more than 1400 volunteer narrators. Text transcriptions are also included. Based on YouDescribe, the project aims to narrate as many videos as possible for visually impaired people and to facilitate automatic narration.

PDF Project page with dataset arXiv

X. Ji, J. Henriques, T. Tuytelaars, A. Vedaldi

NeurIPS Workshops, 2020

Avoiding catastrophic forgetting with context-sensitive generative recall, inspired by biological memory.

PDF arXiv

B. Davidson, M. S. Alvi, J. F. Henriques

ECCV, 2020

PDF

P. Martins, J. F. Henriques, J. Batista

IJCV, 2020

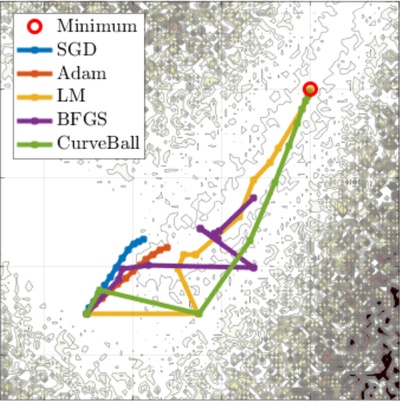

PDF

J. F. Henriques, S. Ehrhardt, S. Albanie, A. Vedaldi

ICCV, 2019

We propose CurveBall, a fast second-order optimizer for deep networks that is simple to implement and does not require hyper-parameter tuning.

PDF Optimisers visualisation (GIF) PyTorch code TensorFlow code Matlab code Slides Appendix arXiv
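A minimal sketch of a CurveBall-style iteration, under simplifying assumptions: the objective is an explicit quadratic f(w) = 0.5·wᵀAw − bᵀw, so the Hessian-vector product Hz = Az is exact; ρ (`rho`) and β (`beta`) are fixed constants, whereas the paper adapts them automatically; and the weights are updated simply as w ← w + z. The idea shown is that a running estimate z of the Newton step is refined with one momentum-like step per iteration, using Hz + ∇f (the gradient of the local quadratic model with respect to z), so no matrix is ever inverted. `curveball_quadratic` is a hypothetical name, not the released code.

```python
def mat_vec(A, v):
    """Dense matrix-vector product for lists of lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def curveball_quadratic(A, b, rho=0.9, beta=0.1, steps=500):
    """Minimise f(w) = 0.5 * w^T A w - b^T w with a CurveBall-style iteration.

    z is a running estimate of the Newton step. Each iteration takes one
    momentum-like step on z using the gradient of the local quadratic model,
    H z + grad f(w), and then moves the weights along z. Here H = A exactly,
    and rho, beta are fixed constants (simplifying assumptions).
    """
    w = [0.0] * len(b)
    z = [0.0] * len(b)
    for _ in range(steps):
        grad = [aw - bi for aw, bi in zip(mat_vec(A, w), b)]  # grad f(w) = A w - b
        hz = mat_vec(A, z)                                     # Hessian-vector product
        delta = [h + g for h, g in zip(hz, grad)]
        z = [rho * zi - beta * di for zi, di in zip(z, delta)]
        w = [wi + zi for wi, zi in zip(w, z)]
    return w


A = [[2.0, 0.5], [0.5, 1.0]]
b = [1.0, 1.0]
print(curveball_quadratic(A, b))  # close to the exact minimiser [2/7, 6/7]
```

With ρ = 0.9 and β = 0.1 this iteration is stable for this particular A; other problems could need different constants, which is why fixing them is only a simplification for illustration.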

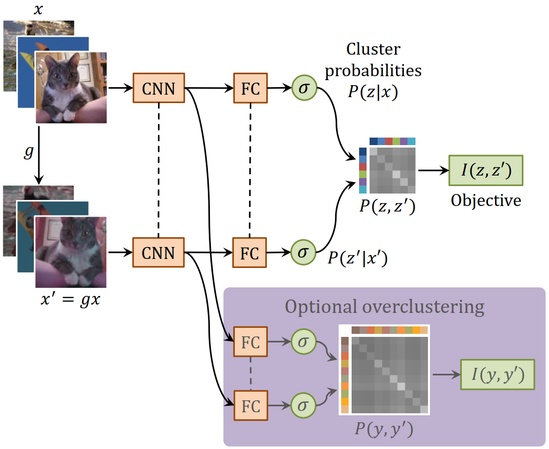

X. Ji, J. F. Henriques, A. Vedaldi

ICCV, 2019

A simple-to-implement mutual information objective that trains deep networks to perform clustering from scratch, with no labels and only one hyper-parameter. Experiments include self-supervised clustering and image segmentation.

PDF PyTorch code arXiv
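The mutual information objective can be sketched as follows, assuming each input comes with a second, transformed view and the network outputs a softmax over C clusters for each: average the outer products of the paired outputs into a joint distribution over cluster pairs, symmetrise it, and maximise the mutual information between the two assignments (the loss is its negative). This plain-Python version is for illustration only; `iic_loss` is a hypothetical name, not the released PyTorch code.

```python
import math


def iic_loss(probs_a, probs_b):
    """Negative mutual information between the cluster assignments of paired views.

    probs_a[i] and probs_b[i] are softmax outputs (length C) for two views of input i.
    """
    n, c = len(probs_a), len(probs_a[0])
    # Joint distribution over cluster pairs, averaged over all pairs.
    joint = [[0.0] * c for _ in range(c)]
    for pa, pb in zip(probs_a, probs_b):
        for i in range(c):
            for j in range(c):
                joint[i][j] += pa[i] * pb[j] / n
    # Symmetrise, since the two views play interchangeable roles.
    joint = [[(joint[i][j] + joint[j][i]) / 2 for j in range(c)] for i in range(c)]
    marg_row = [sum(joint[i]) for i in range(c)]
    marg_col = [sum(joint[i][j] for i in range(c)) for j in range(c)]
    mi = 0.0
    for i in range(c):
        for j in range(c):
            p = joint[i][j]
            if p > 0:  # 0 * log 0 is taken as 0
                mi += p * math.log(p / (marg_row[i] * marg_col[j]))
    return -mi


# Confident, consistent and balanced assignments reach the maximum, ln(2) for C = 2.
print(iic_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

When the two views always land in the same cluster and all clusters are used equally, the mutual information reaches its maximum of ln C; putting every input into a single cluster gives zero mutual information, so the objective discourages that degenerate solution.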

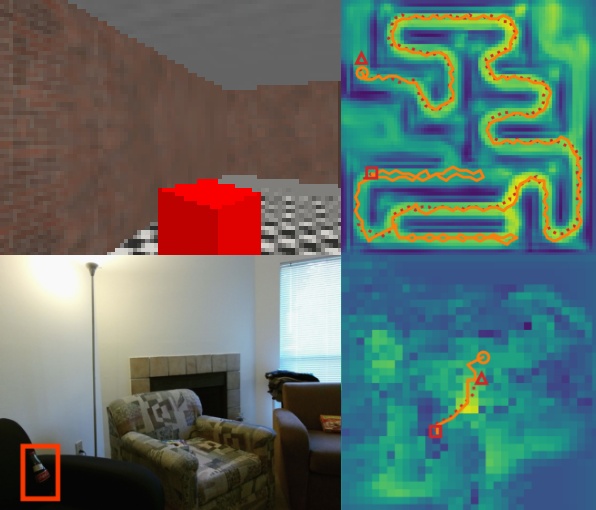

J. F. Henriques, A. Vedaldi

CVPR, 2018 (oral presentation)

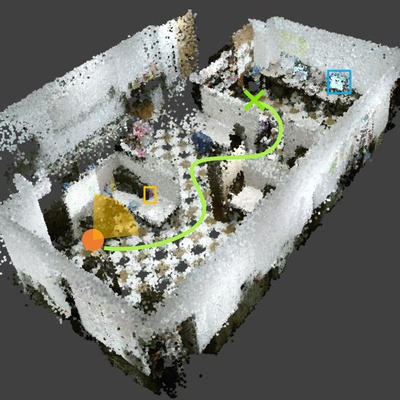

SLAM (Simultaneous Localization And Mapping) is crucial for robotics, but traditional systems cannot improve by learning from data. We propose MapNet, an end-to-end learnable deep network that solves the full SLAM problem, by leveraging efficient operations on a spatial memory.

PDF Blog post Results video (real data) Results video (Doom game) PyTorch code Talk Slides

J. Valmadre, L. Bertinetto, J. F. Henriques, R. Tao, A. Vedaldi, A. W. Smeulders, P. H. Torr, E. Gavves

ECCV, 2018

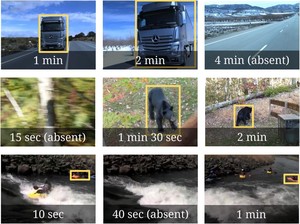

A tracking benchmark with over 14 hours of video, focusing on diverse footage captured "in the wild" and long-term performance.

PDF Project page with dataset

J. F. Henriques, A. Vedaldi

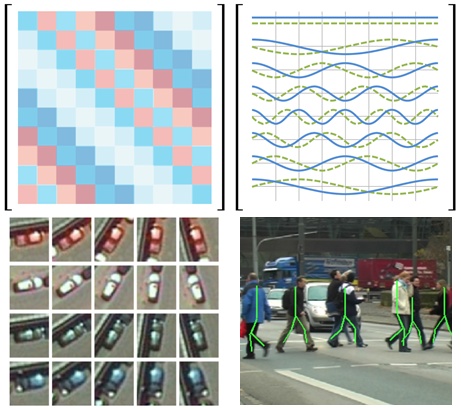

ICML, 2017 (oral presentation)

Convolutions match patterns across translations. We generalize convolutions (and thus CNNs) to work across scaling, rotation and more, including 3D rotations. We show how this generalization can be done with negligible overhead, by performing a single fixed warp before a standard convolution.

PDF Slides (visual explanation) arXiv (proofs variant using simple calculus)
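To see why a single fixed warp can be enough, consider the classic case of scaling and rotation about a known centre: in log-polar coordinates (log-radius, angle), scaling by s becomes a shift of ln s along the first axis and rotating by θ becomes a shift of θ along the second, so a standard, translation-equivariant convolution applied after the warp covers both. The sketch below only checks this coordinate identity on a single point; the functions are illustrative and not taken from the paper's code.

```python
import math


def log_polar(x, y):
    """(log-radius, angle) of the point (x, y) around the origin."""
    return math.log(math.hypot(x, y)), math.atan2(y, x)


def scale_rotate(x, y, s, theta):
    """Scale by s and rotate by theta (radians) about the origin."""
    c, sn = math.cos(theta), math.sin(theta)
    return s * (c * x - sn * y), s * (sn * x + c * y)


p = (3.0, 1.0)
q = scale_rotate(*p, s=2.0, theta=0.5)
(r0, a0), (r1, a1) = log_polar(*p), log_polar(*q)
# Both differences are constant shifts: ln(2) in log-radius, 0.5 in angle
# (no angle wrap-around occurs for this example).
print(round(r1 - r0, 6), round(a1 - a0, 6))  # 0.693147 0.5
```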

A. Mahendran, H. Bilen, J. F. Henriques, A. Vedaldi

arXiv, 2017

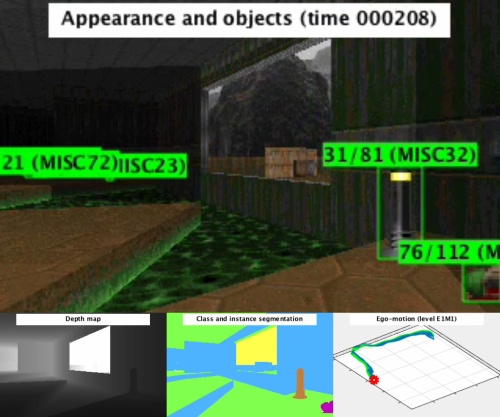

A large dataset in the COCO format with object class/instance bounding boxes and segmentation masks, as well as depth and egomotion, extracted from speedruns of the videogame Doom.

PDF Dataset Code arXiv

J. F. Henriques

PhD thesis, 2016

My thesis, which contains a tutorial introduction to circulant matrices and Fourier methods for machine learning.

PDF
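A small worked example of the key fact behind these Fourier methods: a circulant matrix is diagonalised by the discrete Fourier transform, so multiplying by it is a circular convolution that can be computed element-wise in the Fourier domain. The sketch uses a naive O(n²) DFT to stay self-contained; in practice one would use an FFT, which brings the cost down to O(n log n).

```python
import cmath
import math


def circulant(c):
    """Circulant matrix with first column c: C[i][j] = c[(i - j) % n]."""
    n = len(c)
    return [[c[(i - j) % n] for j in range(n)] for i in range(n)]


def dft(v):
    n = len(v)
    return [sum(v[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(v):
    n = len(v)
    return [sum(v[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


c = [1.0, 2.0, 0.0, -1.0]
x = [0.5, -1.0, 3.0, 2.0]

# Matrix-vector product with the full circulant matrix...
direct = [sum(a * b for a, b in zip(row, x)) for row in circulant(c)]
# ...equals a circular convolution: an element-wise product in the Fourier domain.
via_dft = [v.real for v in idft([a * b for a, b in zip(dft(c), dft(x))])]

print(direct)                          # [5.5, -3.0, -1.0, 7.5]
print([round(v, 9) for v in via_dft])  # same values, up to rounding
```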

P. Martins, J. F. Henriques, R. Caseiro, J. Batista

TPAMI, 2016

PDF Video

L. Bertinetto, J. Valmadre, J. F. Henriques, A. Vedaldi, P. H. S. Torr

ECCV Workshops, 2016

The SiamFC tracker, one of the fastest visual trackers based on deep networks. This basic architecture has been used in numerous follow-up works and in commercially deployed systems.
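The core matching step of a fully-convolutional Siamese tracker can be sketched as dense cross-correlation: the exemplar (the target from the first frame) is slid over a larger search region, and the peak of the resulting score map gives the new target position. In the real tracker both images first pass through the same learned CNN; this sketch assumes raw values as features (an identity embedding), so it only illustrates the correlation and peak-finding step.

```python
def cross_correlate(search, exemplar):
    """Dense cross-correlation of `exemplar` over `search` (valid positions only)."""
    H, W = len(search), len(search[0])
    h, w = len(exemplar), len(exemplar[0])
    return [[sum(exemplar[a][b] * search[i + a][j + b]
                 for a in range(h) for b in range(w))
             for j in range(W - w + 1)]
            for i in range(H - h + 1)]


def locate(search, exemplar):
    """Top-left position (row, col) of the highest score in the score map."""
    scores = cross_correlate(search, exemplar)
    _, row, col = max((s, i, j) for i, r in enumerate(scores) for j, s in enumerate(r))
    return row, col


exemplar = [[1, -1],
            [-1, 1]]
search = [[0] * 5 for _ in range(5)]
# Hide the exemplar pattern at row 2, column 1 of the search region.
for a in range(2):
    for b in range(2):
        search[2 + a][1 + b] = exemplar[a][b]
print(locate(search, exemplar))  # (2, 1)
```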

PDF Project page Code Short talk arXiv

J. F. Henriques, R. Caseiro, P. Martins, J. Batista

TPAMI, 2015

The KCF, an extremely fast visual tracker (hundreds of frames per second), especially suited for resource-constrained devices. It relies on the Fast Fourier Transform, with online learning based on the theory of circulant matrices.

PDF Matlab code C++ code (official OpenCV class, single-scale) C++ code (multi-scale) C++ code (streamlined) arXiv
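A minimal 1-D sketch of the idea, restricted to a linear kernel and a single feature channel: ridge regression over all cyclic shifts of a training sample x has a closed-form solution computed element-wise in the Fourier domain, and the filter's response at every shift of a new sample z costs one more element-wise product. Assumptions: no cosine window, no kernel trick, no multi-channel features, and a naive DFT instead of the FFT, so this is a simplified relative of the KCF rather than its implementation; `train_filter`, `detect` and `lam` are hypothetical names.

```python
import cmath
import math


def dft(v):
    n = len(v)
    return [sum(v[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(v):
    n = len(v)
    return [sum(v[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def gaussian_labels(n, sigma=1.0):
    """Desired response: a Gaussian peaked at zero shift, wrapping around."""
    return [math.exp(-min(i, n - i) ** 2 / (2 * sigma ** 2)) for i in range(n)]


def train_filter(x, y, lam=1e-4):
    """Ridge regression over all cyclic shifts of x, solved per frequency.

    Returns the filter in the Fourier domain: conj(X) * Y / (|X|^2 + lam).
    """
    X, Y = dft(x), dft(y)
    return [Xk.conjugate() * Yk / (abs(Xk) ** 2 + lam) for Xk, Yk in zip(X, Y)]


def detect(filt_hat, z):
    """Filter response at every cyclic shift of the new sample z."""
    Z = dft(z)
    return [r.real for r in idft([f * Zk for f, Zk in zip(filt_hat, Z)])]


x = [1.0, 3.0, -2.0, 0.5, 4.0, -1.0, 2.5, 0.0]
filt_hat = train_filter(x, gaussian_labels(len(x)))
z = x[-3:] + x[:-3]  # x cyclically shifted right by 3 samples
response = detect(filt_hat, z)
print(max(range(len(response)), key=response.__getitem__))  # 3
```

Because the target response is a Gaussian peaked at zero shift, the response to a copy of x cyclically shifted right by three samples peaks at index 3, which is how a tracker of this type reads off the target's displacement between frames.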

R. Caseiro, P. Martins, J. F. Henriques, J. Batista

CVPR, 2015

PDF

J. F. Henriques, P. Martins, R. Caseiro, J. Batista

NeurIPS, 2014

PDF Appendix A Appendix B (with code)

P. Martins, R. Caseiro, J. F. Henriques, J. Batista

ICIP, 2014 (top 10% of accepted papers)

PDF Video arXiv

R. Caseiro, P. Martins, J. F. Henriques, J. Carreira, J. Batista

CVPR, 2013 (oral presentation)

PDF

J. F. Henriques, R. Caseiro, P. Martins, J. Batista

ECCV, 2012

The first tracker based on circulant matrices. A more recent version is the KCF.

PDF Video Matlab code Python code Java code Appendix B Appendix C Poster

R. Caseiro, J. F. Henriques, P. Martins, J. Batista

ECCV, 2012

PDF

P. Martins, R. Caseiro, J. F. Henriques, J. Batista

ECCV, 2012

PDF Video

P. Martins, R. Caseiro, J. F. Henriques, J. Batista

BMVC, 2012 (oral presentation)

PDF Video

R. Caseiro, P. Martins, J. F. Henriques, J. Batista

Pattern Recognition, 2012

PDF

J. F. Henriques, R. Caseiro, J. Batista

ICCV, 2011 (oral presentation)

PDF Results video Talk (part 1) Talk (part 2) Slides

R. Caseiro, J. F. Henriques, P. Martins, J. Batista

ICCV, 2011

PDF

J. F. Henriques, R. Caseiro, J. Batista

ICIP, 2010

PDF

R. Caseiro, J. F. Henriques, J. Batista

ICIP, 2010

PDF

Research-related

Workshops on Preregistration

An alternative publication model for machine learning research

Preregistration separates the generation and confirmation of hypotheses.

There are several advantages to this model: 1) A healthy mix of positive and negative results; 2) Reasonable ideas that don’t work still get published, avoiding wasteful replications; 3) Papers are evaluated on the basis of scientific interest, not whether they achieve the best results; 4) It is easier to plan research; and 5) Results are statistically stronger. Check the pages below for more information, including talks and preregistered machine learning papers.

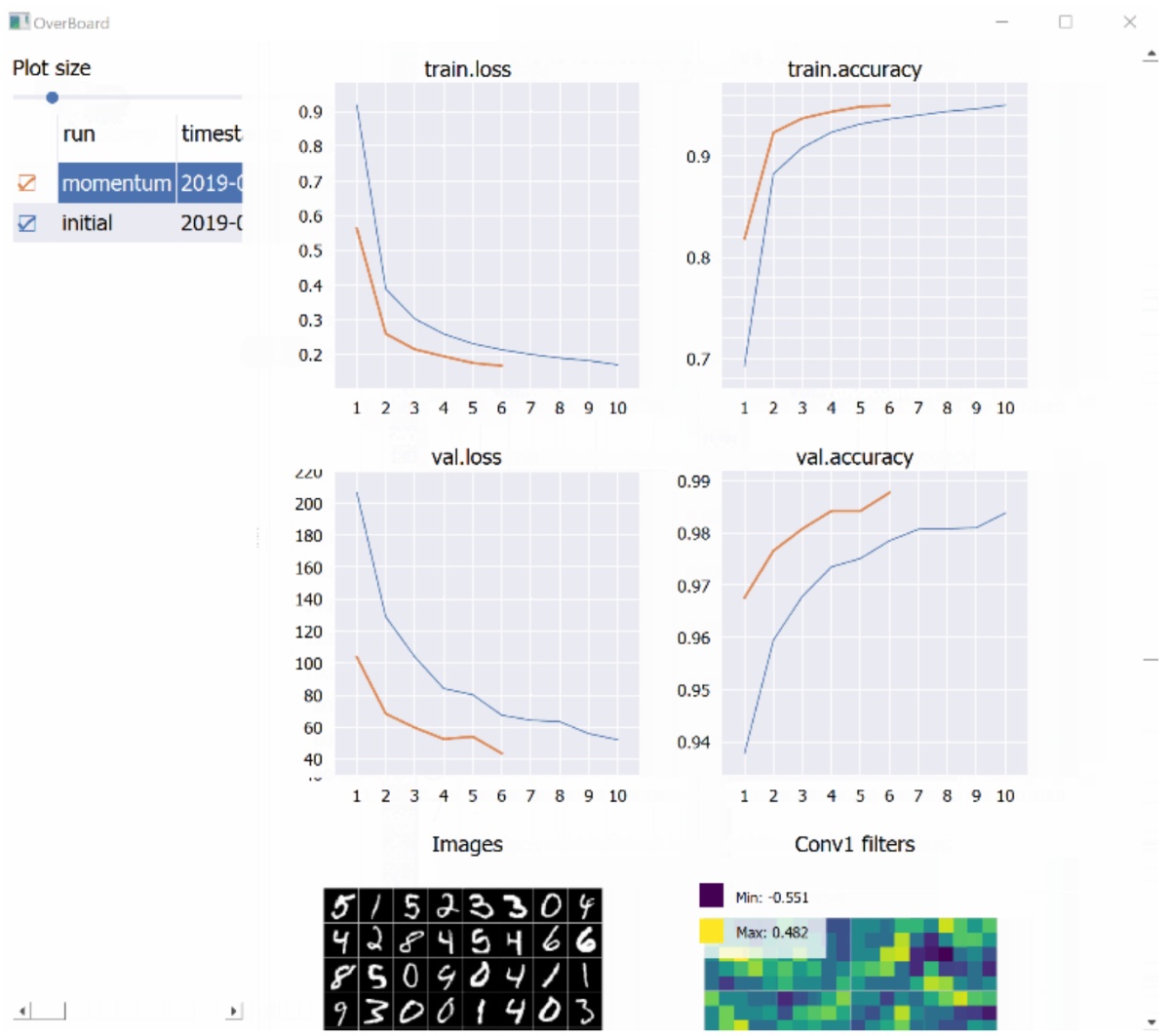

OverBoard

A pure Python dashboard for monitoring deep learning experiments

OverBoard is a lightweight yet powerful dashboard for monitoring your experiments. Install it with `pip install overboard`. It requires PyQt and PyQtGraph (e.g. `conda install pyqt pyqtgraph -c anaconda`) and Python 3.

Fun

Not mutually exclusive with research

S. Albanie, L. Momeni, J. F. Henriques

SIGBOVIK, 2023

Our research team jumps on the LLM bandwagon, scoops in hand. This article was 100% produced by free-range humans.

PDF arXiv

S. Albanie, D. Campbell, J. F. Henriques

SIGBOVIK, 2022

A deep dive into the murky waters of AI future prediction and the legalities of scaling laws.

PDF arXiv

S. Albanie, E. Lu, J. F. Henriques

SIGBOVIK, 2021

Inspired by the squishy sciences, we embark on a perilous journey to explain today's Cambrian explosion in self-supervised methods.

PDF arXiv

S. Albanie, J. Thewmore, R. McCraith, J. F. Henriques

SIGBOVIK, 2020

A way to fast-forward through arXiv submissions, and the artistic value of taping edible objects to a wall (gallery included).

PDF arXiv

S. Albanie, J. Thewlis, S. Ehrhardt, J. F. Henriques

Narrowly missed SIGBOVIK, 2019

The most end-to-end network ever proposed, and a sunnier alternative to cloud computing. Having narrowly missed the deadline for SIGBOVIK 2019, it received the Most timely paper award at SIGBOVIK 2020.

S. Albanie, J. Thewlis, J. F. Henriques

SIGBOVIK, 2018

Includes a discussion of ruthless edu-tech business tactics, and baking cherry cakes with a fellow whose name rhymes with quelqu'un.

PDF arXiv Code SIGBOVIK Reviews

S. Albanie, S. Ehrhardt, J. F. Henriques

SIGBOVIK, 2017

An attempt to end the madness of pitting network-against-network (GAN training). This paper achieved moderate success on social media, which meant that all subsequent papers were doomed to obscurity (but that didn't stop us).

Surprisingly, there is an entirely serious paper that experiments with generative unadversarial training and credits our joke paper as the inspiration! (With full knowledge that it is not to be taken seriously, of course.) Mission accomplished.